The realm of robotics is rapidly expanding its reach into the fascinating and complex domain of cooking. Cooking robotics promises to revolutionize food preparation by automating tasks that have traditionally been performed by human chefs. Before we envision robotic chefs whipping up Michelin-starred meals, let’s look at the current state of this technology and its potential impact.

Cooking robots represent a fusion of robotics, artificial intelligence, and culinary arts. Building effective cooking robots requires a sophisticated blend of these technologies to handle the complexities of ingredient manipulation, recipe execution, and culinary creativity.

The Essential Toolkit: Core Technologies for Robotic Chefs

Robotic Manipulation Systems

At the heart of cooking robots are robotic manipulation systems comprising robotic arms, grippers, and end-effectors capable of handling ingredients, utensils, and cookware with precision and dexterity. Key components include:

Robotic Arms: These are the workhorses of a cooking robot. They mimic a human chef’s movements using multiple degrees of freedom (independently actuated joints). High precision and a wide range of motion are crucial for tasks like stirring, grasping ingredients, and manipulating utensils. Advances in lightweight materials and high-performance actuators continue to improve dexterity and efficiency.
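Each degree of freedom adds a joint angle that the controller must coordinate. The following minimal sketch shows how joint angles determine where the tool ends up. It assumes a simplified planar arm with two revolute joints, whereas collaborative arms commonly have six or seven. The sketch computes forward kinematics, which maps joint angles to the end-effector position. The function name and link lengths are illustrative and do not come from any particular robot.

```python
import math


def forward_kinematics(theta1: float, theta2: float,
                       l1: float, l2: float) -> tuple[float, float]:
    """Return the (x, y) end-effector position of a planar two-link arm.

    theta1 is the shoulder angle measured from the x-axis, theta2 is the elbow
    angle measured relative to the first link (both in radians), and l1, l2 are
    the link lengths.
    """
    # Position of the elbow: the end of the first link
    x1 = l1 * math.cos(theta1)
    y1 = l1 * math.sin(theta1)
    # Add the second link, whose absolute angle is theta1 + theta2
    x = x1 + l2 * math.cos(theta1 + theta2)
    y = y1 + l2 * math.sin(theta1 + theta2)
    return x, y


# Both joints at zero: the arm lies flat along the x-axis
print(forward_kinematics(0.0, 0.0, 0.4, 0.3))
```

With links of 0.4 m and 0.3 m and both joints at zero, the end-effector sits 0.7 m along the x-axis. In practice a controller usually needs the inverse problem: solving for the joint angles that reach a target pose, which is the job of inverse kinematics solvers.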

Grippers and End-Effectors: An end-effector is the device mounted at the end of a robotic arm. Grippers are one common type of end-effector, enabling the robot to grasp, lift, and manipulate objects of varying shapes, sizes, and weights. Their designs vary widely. Some grippers resemble human hands, others look like a claw with two or three fingers, and still others take the form of a soft round ball or have magnetized tips. Robotic grippers are often categorized by their power source, which may be electric, pneumatic (compressed air), or hydraulic (pressurized fluid). Specialized grippers with different designs suit different tasks:

- Vacuum Grippers: Vacuum grippers are popular because they can handle a wide variety of parts and materials without damaging them. They use suction cups, typically made of rubber or polyurethane, and generate suction either from compressed air or with a vacuum pump. They are ideal for smooth, lightweight objects such as leafy greens or plastic wrap.

- Soft Grippers: Grippers made from soft elastomers adapt passively and gently to their targets. This lets them grasp deformable objects safely without bruising or other damage. A soft gripper’s properties are defined by the morphology of its fingers and its actuation method. Because the fingers conform to the shape of the object, they allow delicate handling of fruits, vegetables, and other fragile items. Some robot grippers instead use a granular material, such as ground coffee or plastic beads, encased in a soft outer shell. The shell molds around the item, and when the air is pumped out, the granules jam together and the shell stiffens to hold the item in place.

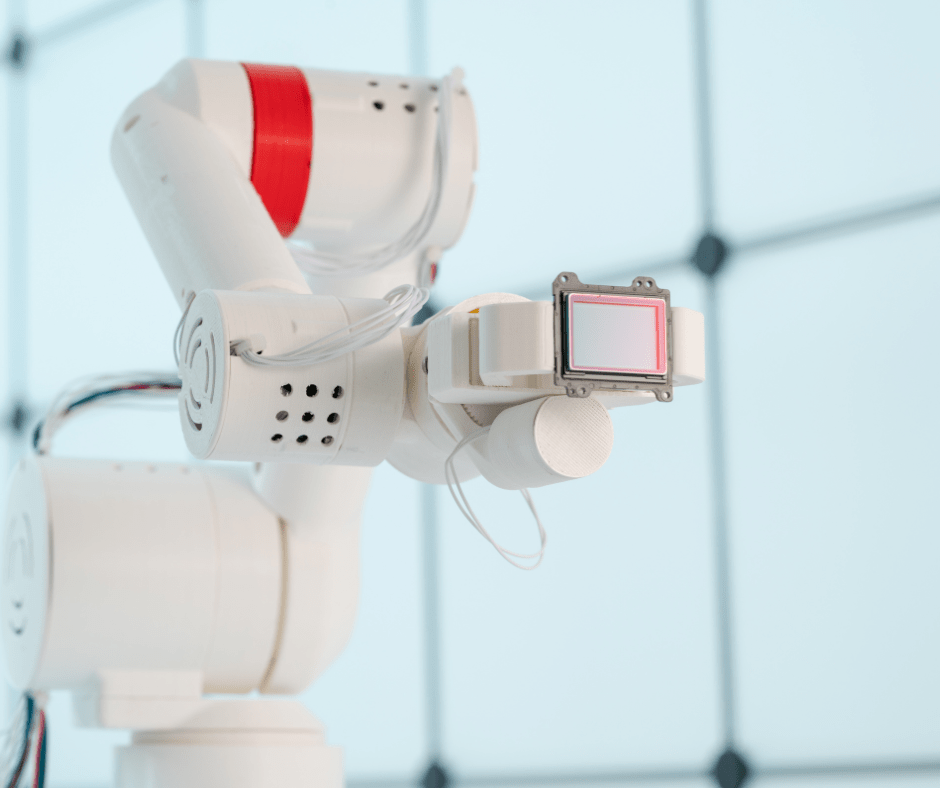

- Grippers with Embedded Sensors: A range of sensor technologies can now give otherwise “dumb” grippers the feedback they need to become smart. Tactile sensors and force sensors are the two main types suited to a gripper that handles delicate food items. Tactile sensors mimic human touch and report the pressure, texture, and slip of the object being grasped. Force sensors measure how much force the gripper exerts on the object. Strain gauge sensors measure the strain (deformation) that an applied force causes in the sensor, which makes them a versatile and reliable option for measuring gripper force. Piezoelectric sensors generate a voltage proportional to the applied force. Their high sensitivity and fast response times make them suitable for dynamic grasping tasks, although their signal decays under a constant load, so they are less suited to measuring static forces. Where the sensors sit within the gripper significantly affects the data they provide. A control system must then process and interpret that data to choose the appropriate grip force for the specific food item being handled.
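The control step described in the sensor item can be sketched as a simple proportional controller that also reacts to slip. This is a minimal illustration under several assumptions. The force sensor is assumed to report newtons, and the tactile sensor is assumed to raise a boolean slip flag. The per-item limits `target_force` and `max_force`, the gain, and the slip step are all hypothetical values, not tuned for any real fruit.

```python
from dataclasses import dataclass


@dataclass
class GripController:
    """Adjusts grip force from force and slip feedback (hypothetical sketch)."""
    target_force: float       # newtons: a light, secure hold for this item
    max_force: float          # newtons: above this the item may be bruised
    gain: float = 0.5         # proportional gain on the force error
    slip_step: float = 0.2    # newtons added to the target whenever slip occurs

    def update(self, measured_force: float, slip_detected: bool,
               command: float) -> float:
        """Return the next force command given the latest sensor readings."""
        if slip_detected:
            # The object is sliding: grip harder, but never above the safe limit
            self.target_force = min(self.target_force + self.slip_step,
                                    self.max_force)
        # Proportional correction toward the target using the measured force
        error = self.target_force - measured_force
        new_command = command + self.gain * error
        # Clamp the command to the safe range for this item
        return max(0.0, min(new_command, self.max_force))


controller = GripController(target_force=1.0, max_force=2.0)
print(controller.update(measured_force=0.5, slip_detected=False, command=0.5))
```

Capping both the target and the command at `max_force` reflects the trade-off in the text. A gripper that slips drops the food, but one that squeezes too hard bruises it, so slip feedback may only push the force up to a per-item ceiling.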

Vision Systems

Creating a vision system for a cooking robot to handle delicate food items requires careful consideration of perception sensors and technologies that can provide accurate, real-time feedback while ensuring gentle handling of the ingredients.

A high-resolution camera is the primary sensor in the vision system. Cameras with adjustable focal lengths and zoom capabilities allow close-up views of ingredients and the surrounding environment without moving the robot closer, which minimizes the risk of damage. High-resolution cameras provide visual feedback for object recognition, pose estimation, and quality assessment during ingredient handling and cooking tasks.

Depth sensors help the robot assess the spatial arrangement of delicate food items and determine their position and orientation relative to its gripper. Understanding the 3D structure of the environment allows precise grasping and manipulation of objects. Depth also helps the robot distinguish between different ingredients in a cluttered scene, which is crucial for tasks like picking vegetables off a chopping board. Common depth sensors include:

- LiDAR (Light Detection and Ranging): LiDAR uses lasers to create a 3D point cloud of the environment. It is robust to varying lighting conditions but can be more expensive than other options.

- Structured Light Cameras: These project a known light pattern onto the scene and infer depth from how the pattern deforms over surfaces.

- Time-of-Flight (ToF) Cameras: A ToF camera measures the time it takes for light to travel to an object and back. It provides good depth resolution at a lower cost than LiDAR.
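Two small calculations connect these depth sensors to grasping. First, a time-of-flight measurement covers the path to the object and back, so the distance is half of the speed of light times the round-trip time. Many ToF cameras infer that time indirectly from the phase shift of modulated light. Second, a grasp planner typically converts a pixel plus its depth reading into a 3D point using the pinhole camera model. The sketch below assumes known camera intrinsics `fx`, `fy`, `cx`, `cy`, and the function names are illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_distance(round_trip_time_s: float) -> float:
    """Distance to a surface from a time-of-flight round-trip time.

    Light travels to the object and back, so the one-way distance is half
    of the total path length.
    """
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def deproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> tuple[float, float, float]:
    """Convert pixel (u, v) with a depth reading into a 3D camera-frame point.

    Uses the pinhole model: fx, fy are focal lengths in pixels and (cx, cy)
    is the principal point (the pixel where the optical axis meets the image).
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


print(tof_distance(2e-9))                                # about 0.3 m
print(deproject(380, 240, 0.6, 600, 600, 320, 240))      # a point 6 cm right of the optical axis
```

A 2 ns round trip corresponds to roughly 0.3 m. The intrinsic values in the usage lines are made up for illustration; real values come from the camera’s calibration.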

Machine Learning and Processing Power

Building a cooking robot involves integrating various machine learning algorithms and software components to enable tasks such as recipe understanding, ingredient recognition, motion planning, and adaptive control.

Machine Learning Algorithms

- Natural Language Processing (NLP) Algorithms: NLP algorithms can be used to parse and comprehend recipe instructions, ingredient lists, and cooking procedures. Techniques such as named entity recognition (NER), part-of-speech tagging (POS tagging), and syntactic parsing can be employed to extract relevant information from textual recipes.

- Object Recognition (Convolutional Neural Networks – CNNs): These algorithms are crucial for enabling the robot to identify ingredients, utensils, and appliances in the kitchen environment. When trained on enough labeled camera images, CNNs can learn to distinguish between different objects with high accuracy. Pose estimation algorithms then estimate the position and orientation of ingredients and utensils relative to the robot’s gripper, which lets the robot plan and execute precise grasping and manipulation tasks.

- Image Segmentation (Fully Convolutional Networks – FCNs): Beyond identifying objects, the robot needs to know exactly which pixels belong to each one. FCNs perform semantic segmentation: they label every pixel with a class such as “strawberry” or “bowl,” which reveals the scene’s spatial layout. Telling apart multiple instances of the same object, such as each individual strawberry in a bowl, requires instance segmentation. Models such as Mask R-CNN add a small FCN mask branch to an object detector for this purpose, allowing the robot to grasp each fruit precisely.

- Action Recognition (Recurrent Neural Networks – RNNs): For robots to learn and replicate cooking tasks, they need to understand the sequence of actions involved. RNNs, particularly Long Short-Term Memory (LSTM) networks, can analyze video demonstrations of cooking processes and learn the temporal dependencies between actions.

- Reinforcement Learning: Reinforcement learning (RL) algorithms train the robot through trial and error. Using techniques such as deep Q-learning and policy gradient methods, the robot can learn optimal grasping strategies for different objects from simulated or real-world interactions. It can also learn to generate trajectories that let its manipulator perform cooking tasks efficiently and safely. RL is also used for adaptive control based on sensory feedback. By learning from past experience and adjusting its behavior in real time, the robot can adapt to changes in the environment and handle unforeseen situations during cooking tasks.
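To give a feel for the information extraction described in the NLP item, here is a deliberately simple rule-based stand-in. It is not named entity recognition, which would learn to tag quantities, units, and ingredients from annotated recipes. Instead, a regular expression pulls a leading quantity out of an ingredient line, and a small hand-written unit list picks out the unit. That list is a hypothetical vocabulary for this sketch.

```python
import re
from fractions import Fraction

# Hypothetical, deliberately small unit vocabulary for this sketch
UNITS = {"g", "kg", "ml", "l", "cup", "cups", "tbsp", "tsp", "pinch"}

# A leading quantity such as "2", "1.5" or "1/2", followed by the rest of the line
QUANTITY = re.compile(r"^\s*(\d+(?:\.\d+)?|\d+/\d+)\s+(.*)$")


def parse_ingredient(line: str) -> dict:
    """Split a recipe line like '2 cups flour' into quantity, unit and ingredient."""
    quantity = None
    unit = None
    rest = line.strip()
    match = QUANTITY.match(rest)
    if match:
        # Fraction accepts integer, decimal and "a/b" strings alike
        quantity = float(Fraction(match.group(1)))
        rest = match.group(2)
    words = rest.split(maxsplit=1)
    if len(words) == 2 and words[0].lower() in UNITS:
        unit = words[0].lower()
        rest = words[1]
    return {"quantity": quantity, "unit": unit, "ingredient": rest}


print(parse_ingredient("1/2 tsp salt"))
```

For `"1/2 tsp salt"` this yields a quantity of 0.5, the unit `"tsp"`, and the ingredient `"salt"`. A line such as `"salt to taste"` passes through with no quantity or unit. Hand-written rules like these break quickly on real recipes, which is why learned taggers are preferred.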
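Deep Q-learning replaces a lookup table with a neural network, but the update it approximates is easiest to see in tabular form: Q(s, a) ← Q(s, a) + α[r + γ·max Q(s′, a′) − Q(s, a)]. The sketch below runs tabular Q-learning on a hypothetical toy task. The gripper steps between five discrete force levels. Level 2 holds the item securely, level 4 crushes it, and every extra adjustment costs a little. The rewards, hyperparameters, and names are illustrative assumptions, not drawn from any real system.

```python
import random

ACTIONS = (-1, 0, 1)   # loosen, hold, tighten (hypothetical discrete actions)
N_LEVELS = 5           # grip-force levels 0..4
GOAL, CRUSH = 2, 4     # level 2 holds securely; level 4 crushes the item


def step(level: int, action: int) -> tuple[int, float, bool]:
    """Toy environment: return (next_level, reward, done)."""
    nxt = min(max(level + action, 0), N_LEVELS - 1)
    if nxt == GOAL:
        return nxt, 10.0, True
    if nxt == CRUSH:
        return nxt, -10.0, True
    return nxt, -1.0, False  # small cost per adjustment favors quick, secure grasps


def train(episodes: int = 500, alpha: float = 0.1, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Learn a Q-table with epsilon-greedy tabular Q-learning."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_LEVELS)]
    for _ in range(episodes):
        level, done = 0, False  # every episode starts with an open gripper
        while not done:
            # Epsilon-greedy: usually exploit the best known action, sometimes explore
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[level][i])
            nxt, reward, done = step(level, ACTIONS[a])
            # Q-learning target; terminal states have no future value
            target = reward if done else reward + gamma * max(q[nxt])
            q[level][a] += alpha * (target - q[level][a])
            level = nxt
    return q


q_table = train()
print([max(range(len(ACTIONS)), key=lambda i: q_table[s][i]) for s in (0, 1)])
```

After training, the greedy action at levels 0 and 1 is "tighten" (index 2), the shortest route to a secure grip that avoids the crushing level. Deep Q-learning follows the same update but fits a network to Q so it can handle continuous sensor states.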

Software Components

- Robot Operating System (ROS): ROS is an open-source meta-operating system for robots. It provides a distributed framework of processes (called nodes) for integrating software components and controlling the robot’s hardware. It also offers libraries, tools, and communication protocols for developing and deploying robotics applications, including perception, planning, and control modules. Nodes are organized into packages (and, in older ROS releases, stacks), which can be easily shared and distributed. ROS provides official client libraries for C++ and Python, and community libraries exist for other languages. MoveIt (originally styled MoveIt!) is a widely used motion planning framework for ROS. It provides tools for motion planning, kinematics, collision checking, and trajectory processing, enabling the robot to plan and execute complex manipulation tasks while avoiding collisions with obstacles in its environment.

- 3D Simulation Software (Gazebo, V-REP): Gazebo is a physics-based simulator that lets developers test robotic systems in a virtual environment. It realistically simulates robot dynamics, sensors, and environments, so developers can validate and debug their algorithms before deploying them on real hardware. V-REP, now developed under the name CoppeliaSim, is an alternative robot simulator.

- Machine Learning Framework (TensorFlow, PyTorch): These frameworks provide the foundation for building, training, and deploying machine learning models. They offer tools for data processing, model optimization, and integration with other software components. Supervised learning models require a large amount of labeled training data, such as images of ingredients, utensils, and different stages of cooking tasks, and building a robust dataset is crucial for good performance. For a smooth kitchen experience, the robot should also be able to understand and respond to human instructions. This might involve speech recognition and NLP to interpret spoken commands, and vision-based gesture recognition to interpret gestures.

- Motion Planning Software: This software helps the robot plan its movements to achieve specific goals. It considers the robot’s physical limitations, obstacles in the environment, and the desired task to generate safe and efficient motion trajectories.

- Robot Hardware Drivers: Interfacing with the robot’s hardware components, such as actuators, sensors, and end-effectors, is essential for controlling the robot’s movements and gathering sensory data. Hardware drivers translate high-level commands from the software into the low-level signals that drive the actuators and read the sensors.
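Motion planners such as those used by MoveIt typically search the arm’s multi-dimensional joint space, often with sampling-based algorithms. The core idea is a search for a collision-free path to a goal that respects obstacles. As a much-simplified sketch of that idea, here is A* search on a hypothetical 2D occupancy grid of a countertop. In the grid, `#` marks an obstacle such as a pot, and each move between neighboring cells costs 1. The grid layout and function name are assumptions for illustration only.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to goal; '#' cells are obstacles."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance never overestimates for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    g_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Follow parent links back to the start, then reverse
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None  # no collision-free path exists


countertop = ["....",
              ".##.",
              "...."]
print(astar(countertop, (0, 0), (2, 3)))
```

Because the heuristic is admissible and consistent on this grid, the first path A* returns is a shortest one. Real arm planners must additionally check collisions for the whole arm body and respect joint limits, which a 2D grid ignores.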

Safety protocols are paramount in any kitchen environment. The software needs to be designed to prevent accidents and handle unexpected situations gracefully, for example through fallback mechanisms and error-correction routines.

By integrating these machine learning algorithms and software components, developers can build a robust and intelligent cooking robot. Such a robot can understand recipes, recognize ingredients, plan and execute cooking tasks, and adapt to changes in its environment. This interdisciplinary approach combines expertise in machine learning, robotics, and software engineering to create a versatile and autonomous cooking system.
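One common shape for the fallback mechanisms mentioned in the safety discussion is bounded retry followed by a safe stop. The sketch below assumes a hypothetical `step` callable that returns `True` on success and may raise `RuntimeError`, standing in for something like a driver timeout. It also assumes a hypothetical `on_safe_stop` hook that would halt the arm. Neither corresponds to any particular robot API.

```python
from typing import Callable, Optional


class SafetyStop(Exception):
    """Raised after the robot has been brought to a safe stop."""


def run_with_fallback(step: Callable[[], bool],
                      max_retries: int = 2,
                      on_safe_stop: Callable[[], None] = lambda: None) -> int:
    """Try a task step up to max_retries + 1 times, then stop safely.

    Returns the attempt number on which the step succeeded.
    """
    last_error: Optional[Exception] = None
    for attempt in range(1, max_retries + 2):
        try:
            if step():
                return attempt
        except RuntimeError as err:  # e.g. a hypothetical driver timeout
            last_error = err
    # Every attempt failed: halt the hardware first, then report the failure
    on_safe_stop()
    raise SafetyStop(f"step failed after {max_retries + 1} attempts") from last_error
```

The ordering is the design choice that matters here. The hardware is stopped before the failure propagates, so whatever code catches `SafetyStop` can assume the arm is already stationary.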